On a January afternoon in 2020, Robert Williams was at work when he got a call from the Detroit police saying he should turn himself in. Baffled, he asked, “For what?” Because the caller wouldn’t answer his questions about the crime, he assumed it was a joke. But after leaving work and phoning his wife, Melissa, he learned that the police were at his home.

When he approached his driveway, he passed the waiting police car, which pulled in behind him and blocked him in. Again, he asked why he was being arrested. Again, they refused to explain, saying, “You don’t get to ask questions.”

Then, in front of Melissa, their two young daughters (then ages 2 and 5), and the neighborhood, the police handcuffed and arrested Williams.

“When they were handcuffing me, my daughter came outside and I told her to go back in the house,” he says. He tried to reassure her, saying, “I’ll be right back.”

After demanding to see an arrest warrant, he finally learned that he was being accused of felony larceny, but the police did not provide any other details. As they led her husband away, Melissa asked where they were taking him. The detention center in Detroit, they said. When she asked for the center’s phone number, they told her to Google it. None of it made sense. Why were police from Detroit arresting her husband, who lived in suburban Farmington Hills and worked in Rochester Hills? “Are these even real cops?” she wondered.

The confusion took on Kafkaesque proportions as Williams was processed at the detention center. For more than a day, he sat in a filthy, overcrowded cell with several other men, waiting to learn why he had been arrested. He received no food and, for lack of space, had to lie on the concrete floor.

All the while, he knew he had broken his promise to his daughter that he’d return soon.

The following morning, he pleaded not guilty at his arraignment and waived his right to remain silent because an officer said he would explain the arrest if Williams agreed to speak. During that interview, Williams finally learned that facial recognition technology (FRT) had identified him as the person who stole watches from an upscale store in Detroit. He was then shown an enlarged still from the surveillance footage.

“I said, ‘That’s not me,’” he says. Seeing another picture, “I said, ‘That’s not me, either. This doesn’t even look like me.’” Eventually, the officers agreed but lacked the authority to release him. He waited eight more hours before he was freed and was told to wait outside for his ride home. On a cold, rainy night, Melissa made her way to an unfamiliar part of Detroit to bring him home, where he found his daughter waiting.

“She was crying and mad,” he says. “She asked why I lied about coming right back.”

Flawed technology

Following his nightmarish arrest, Williams worked with Michigan Law’s Civil Rights Litigation Initiative (CRLI), the ACLU of Michigan, and the national ACLU to make sure that no one else would be wrongfully arrested because of faulty FRT results.

More than four years later, in June, his journey concluded with a first-of-its-kind settlement that requires the Detroit Police Department (DPD) to change how it uses FRT. The agreement establishes what are now the nation’s strongest police department policies and practices constraining law enforcement’s use of FRT. (For more details about the settlement, see the “Far-Reaching Impact” section below.)

“I’m grateful and happy it’s over,” Williams says about the circumstances that upended his family’s life. “We didn’t want them to proceed with use of FRT, but we would rather they proceed with these guidelines in the use of the technology [as opposed to having no guidelines at all].”

Between his arrest and the settlement, the DPD falsely arrested two other Black people based on faulty use of FRT. The technology’s flaws are especially pronounced when it is used to identify people of color, because most FRT algorithms are trained on data sets consisting primarily of white faces. Digital cameras can also fail to capture the degree of color contrast that an FRT algorithm needs to produce and match face prints from photos of darker-skinned people.

According to a 2019 study by the National Institute of Standards and Technology that evaluated 189 face recognition algorithms, false-positive rates are highest among East and West African and East Asian people.

“This effect is generally large, with a factor of 100 more false positives between countries,” the study states. Despite these flaws, police departments around the country continue to use FRT.

“Police face pressure to use whatever tools they can to fight crime,” says Michael J. Steinberg, professor from practice at Michigan Law and CRLI director. “The problem is that technology, especially in the early stages, can be misused. FRT is so inaccurate at identifying people of color that many municipalities have refused to allow their police departments to use it. And unless police officials adopt safeguards such as the ones mandated by the settlement, FRT will continue to result in wrongful arrests and the wholesale violation of innocent people’s civil rights.”

Williams’s case is historic because it is the first in the country in which changes to a police department’s FRT policies have been negotiated as part of a settlement. Although it will not abolish the use of FRT, the settlement is designed to ensure that what happened to Williams doesn’t happen to anybody else in Detroit.

“Even if Detroit police continue to use the technology, there will be more protections in place so they can’t just run a search and then arrest someone without using other tools to conduct an investigation,” says Julia Kahn, ’24, one of 18 CRLI student-attorneys who worked on the case.

Sloppy investigative work

According to the complaint the CRLI filed, the DPD adopted the technology without establishing quality-control provisions or adequately training its personnel. In Williams’s case, it also relied too heavily on FRT instead of doing additional investigative work.

Making matters worse, the video was grainy, the perpetrator (who was never caught) wore a hat that partially obscured his face, and the lighting was poor.

“They fed a poor-quality probe image into the Michigan State Police’s facial recognition technology system,” says Steinberg. “And it came back with dozens of possible matches.” From those, the police chose to focus on the photo on Williams’s expired driver’s license.

“His updated driver’s license photo didn’t come up. And, according to the technology, his expired license photo was the ninth-most-likely suspect,” says Steinberg. “Nonetheless, they decided to focus on Williams.”

The police also conducted a six-photo lineup after they’d identified Williams through FRT. However, the person who viewed the photos, an employee of the store’s security firm, had not been present when the theft occurred and instead based her identification on the poor-quality surveillance footage.

During the civil rights litigation, when the CRLI team spoke with the magistrate judge who had issued Williams’s arrest warrant, she said the police had misled her in the information they provided and that, had she known all the facts of the investigation, she never would have issued the warrant. Even police officials admitted errors in the investigation at a July 2020 meeting of the Detroit Board of Police Commissioners. The police chief at the time, James Craig, said that “this was clearly sloppy, sloppy investigative work.” For example, the police did not take statements from witnesses who were at the store when the theft occurred or collect evidence such as fingerprints or DNA.

Says Steinberg, “Before arresting Williams, the police should have, at minimum, tried to figure out where he was on the day of the theft.”

Williams actually had an alibi: At the time of the crime, which happened in October 2018, he was driving from his job in Rochester Hills to his home in Farmington Hills, an approximately 24-mile route that would have taken him nowhere near the store in Detroit. His proof was in a live stream of his commute home that he had posted on Facebook.

“The Detroit Police Department made numerous mistakes that led to Robert’s arrest,” says Nethra Raman, ’24, a former student-attorney in the CRLI. “We argued that, given the inaccuracy of facial recognition technology, it was unconstitutional to seek a warrant or conduct a photo lineup based on FRT alone.”

Regulating FRT use

All of these issues illustrate a need for rules constraining the use of FRT, says former CRLI student-attorney Collin Christner, ’24.

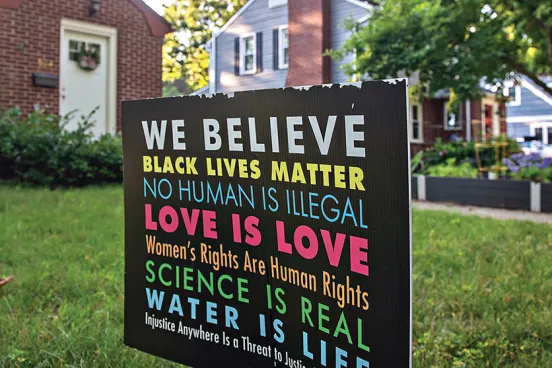

“The growing use of facial recognition technology by police should worry everyone, but particularly because of the intersection between privacy issues and race,” Christner says. “Because facial recognition technology is worse at identifying Black people, this case also raises issues of racially disparate policing.

“We don’t know how this technology is going to develop tomorrow or next year or five years from now, so we need to be thinking proactively,” he adds. “One of the reasons this case is so important is that it is educating lawmakers about the dangers of FRT. We need Congress and state legislatures to enact laws regulating the use of the technology.”

Toward that end, Robert and Melissa Williams have become what she terms “unintentional activists” as they’ve sought to raise awareness about the use and possible dangers of FRT. Robert credits Melissa with recognizing the dangers of the technology before he fully understood them. He has also testified at hearings before state legislatures around the country that are considering legislation to regulate the technology. Williams hopes that his testimony and his efforts to promote awareness in the broader community will translate into action.

“In California, the legislature had previously banned the technology but was thinking of changing that because the legislation was sunsetting,” says Kahn. “Then Robert shared his story. And instead of the bill that would simply allow local police departments to use the technology, the ACLU of California was able to pass a bill that was much more protective of freedoms of individuals and of privacy.”

Four years on, Williams continues to deal with the legal and personal fallout from the arrest. He’s not sure whether it contributed to a series of strokes he suffered in October 2020, but he has been diagnosed with PTSD stemming from the wrongful arrest. He and Melissa also remain concerned about the arrest’s impact on their daughters.

“I would say that it doesn’t just affect the person who is arrested. I have a whole family, and they were also affected by this,” he says. “Four years ago, I would have thought that I was on board with the use of facial recognition technology. But now I think they have a long way to go because there are so many ways that it could go wrong.”